What happens when surveillance cameras become actively aware?

The rapid development of artificial intelligence has brought great changes to people's lives, and surveillance systems are among the things it has changed. Traditional monitoring equipment can do little more than supply evidence in court, and reviewing its footage is labor-intensive. With advances in the building blocks of artificial intelligence, namely basic research, computing power and training data sets, the application scenarios for monitoring equipment are becoming more and more varied. This article takes the work of IC Realtime and Boulder AI as examples and discusses the prospects of AI monitoring equipment, its problems, and the questions it raises. Let's take a look.

The following is the translation:

Surveillance cameras are commonly thought of as digital eyes through which people can watch live video feeds. Most surveillance cameras, however, are passive: they are there as a deterrent, or to provide evidence when something goes wrong. Your car was stolen? Check the CCTV.

But things are changing fast. Artificial intelligence is giving surveillance cameras digital brains to match their eyes, allowing them to analyze live video on their own without human help. This may be good news for public safety, helping police and emergency personnel spot crimes and accidents more easily, and it opens up a range of scientific and industrial applications. But it also raises serious questions about the future of privacy and brings new risks to social justice.

What happens when governments can track large numbers of people using CCTV? When the police can digitally trace your movements around a city just by uploading your photo to a database? Or when a biased algorithm running on the cameras in a mall alerts the police because it does not like the look of a particular group of people?

AI surveillance starts with searchable video

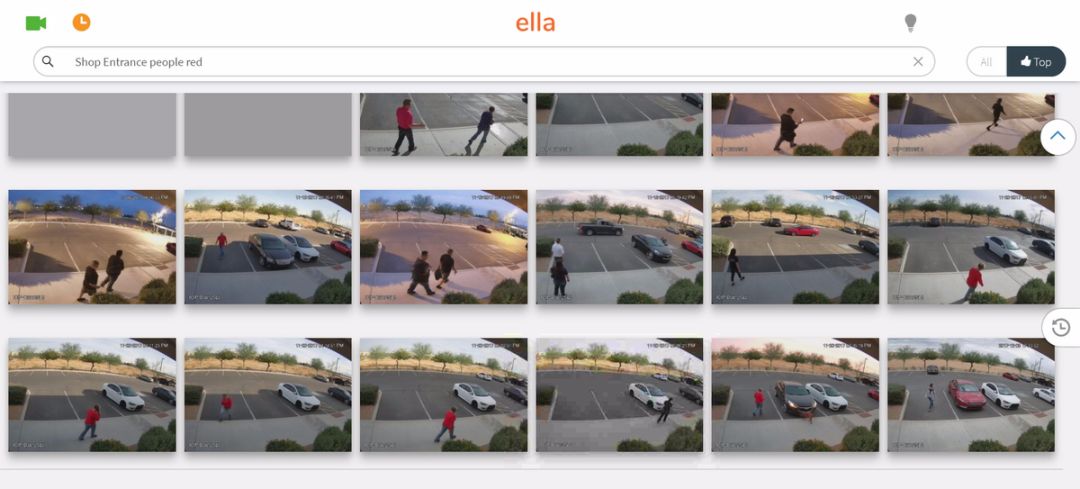

Although these scenarios are still some way off, we are already seeing the first results of combining artificial intelligence with surveillance. IC Realtime is one example. Its flagship product, unveiled in December of last year, was billed as a Google for closed-circuit television. It is an app and web platform called Ella that uses AI to analyze what is happening in video feeds and make the footage instantly searchable. Ella recognizes thousands of natural language queries, letting users search footage for specific clips, people wearing a certain color of clothing, or even a particular make and model of car.
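To make the idea of searchable footage concrete, here is a minimal sketch of one way such a search could work, assuming a vision model has already tagged each clip with single-word labels. It is not a description of how Ella is implemented; the `Clip` type, the camera names, and the labels are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    camera: str        # hypothetical camera identifier
    start_s: float     # clip start time, in seconds
    labels: frozenset  # lowercase tags assumed to come from a vision model


def build_index(clips):
    """Map each label to the list of clips that carry it."""
    index = defaultdict(list)
    for clip in clips:
        for label in clip.labels:
            index[label].append(clip)
    return index


def search(index, query):
    """Return clips whose labels include every word of the query, oldest first."""
    words = query.lower().split()
    if not words:
        return []
    # Look up the first word, then keep only clips that carry all the words.
    candidates = index.get(words[0], [])
    matches = [clip for clip in candidates if set(words) <= clip.labels]
    return sorted(matches, key=lambda clip: clip.start_s)


# Illustrative data only; these clips and tags are made up.
sample_clips = [
    Clip("gate", 12.0, frozenset({"man", "red"})),
    Clip("dock", 3.0, frozenset({"truck", "ups"})),
    Clip("lobby", 5.0, frozenset({"man", "red", "bag"})),
]
sample_index = build_index(sample_clips)
print([clip.camera for clip in search(sample_index, "red man")])
```

Because the expensive step (tagging) happens once, when the footage is ingested, a query only has to look up labels, which is one plausible reason results can come back in seconds.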

In a web demo, IC Realtime CEO Matt Sailor demonstrated Ella connected to about 40 surveillance cameras at an industrial park. He tried a variety of searches, such as "a man in red", "UPS truck", and "police car", all of which returned relevant footage in a matter of seconds.

"Suppose there is a robbery and you don't really know what happened," Sailor said. "But a Jeep happened to pass by, so we search for 'Jeep' in Ella." The screen then fills with clips of different Jeeps passing by. This, Sailor explained, will be the first major benefit of combining artificial intelligence and closed-circuit television: it makes it easy to find what you are looking for, without having to sift through hours of video. Running on Google Cloud, Ella can search footage from almost any CCTV system.

IC Realtime is also targeting the booming market for "smart" home security cameras, which is served by companies such as Amazon, Logitech, Netgear and Google's Nest.

Use Ella to search for people in red

“We are now 100% accurate in Idaho.”

While IC Realtime provides cloud-based analytics that can upgrade existing "dumb" cameras, other companies are building artificial intelligence directly into their hardware. One of the big advantages of integrating AI into the device is that it can work without an internet connection. Boulder AI is a startup that sells “Vision as a Service” using its own stand-alone AI cameras, and it can tailor a machine vision system to each customer.

Its founder, Darren Odom, gave the example of a customer that built a dam in Idaho. To comply with environmental regulations, the customer has to monitor the number of fish passing through. Odom said: "At first they had someone sitting at a window counting how many fish swam by. Later they switched to surveillance video, with someone watching it remotely." Finally, they contacted Boulder AI to help them build a custom AI closed-circuit television system to identify fish. Odom is proud to say: “We really nailed fish identification using computer vision, and now the accuracy of salmon identification in Idaho is as high as 100%.”

IC Realtime represents the general-purpose end of the market, and Boulder AI shows what a specialist contractor can do, but in both cases what these companies currently offer is only the tip of the iceberg. Just as machine learning has made rapid progress in recognizing objects, the ability to analyze scenes, activities, and movements is expected to improve quickly, because the key ingredients of artificial intelligence (basic research, computing power, and training data sets) are all in place. Two of the major data sets for video analysis are produced by YouTube and Facebook, companies that also want artificial intelligence to help them moderate content on their platforms. YouTube's data set, for example, contains more than 450,000 hours of labeled video, which the company hopes will stimulate "innovation and advancement in video understanding." Google, MIT, IBM, and DeepMind are all involved in similar projects.

IC Realtime is already developing advanced tools such as face recognition, and Boulder AI is exploring the same kind of advanced analysis.

The biggest obstacle is still low-resolution video

For experts in surveillance and artificial intelligence, the introduction of these features raises both technical and ethical challenges. As is often the case with AI, the two categories are intertwined: it is a technical problem that machines cannot understand the world as humans do, but it becomes an ethical problem when we let them make decisions for us.

Although artificial intelligence has made great progress in video surveillance in recent years, computers still face fundamental challenges in understanding video. For example, a trained neural network can analyze human behavior in a video by subdividing the human body into parts (arms, legs, shoulders, head, and so on) and then observing how these small stick figures change from one frame of video to the next. From this, AI can tell you whether someone is running, but only if the video resolution is good enough.
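The frame-to-frame comparison described above can be sketched in a few lines. This is a simplified illustration, not any vendor's method: it assumes a pose estimator has already produced pixel coordinates for each body part in each frame, and the joint names and the `threshold` value are hypothetical choices made for the example.

```python
import math


def body_height(pose):
    """Pixel distance from head to ankle, used to normalize for image scale."""
    (hx, hy), (ax, ay) = pose["head"], pose["ankle"]
    return math.hypot(ax - hx, ay - hy)


def mean_joint_speed(prev_pose, pose, fps):
    """Average joint movement between two frames, in body heights per second."""
    height = body_height(pose)
    if height == 0:
        raise ValueError("pose has zero height; cannot normalize")
    total = 0.0
    for joint, (x, y) in pose.items():
        px, py = prev_pose[joint]
        total += math.hypot(x - px, y - py)
    return (total / len(pose)) / height * fps


def looks_like_running(poses, fps, threshold=1.5):
    """Flag a sequence of poses as running when its mean speed is high.

    threshold is an illustrative value, not a calibrated one.
    """
    if len(poses) < 2:
        return False
    speeds = [mean_joint_speed(a, b, fps) for a, b in zip(poses, poses[1:])]
    return sum(speeds) / len(speeds) > threshold
```

The sketch also hints at why resolution matters: when a person is only a few pixels tall, an error of a pixel or two in locating a joint is large relative to the body height used for normalization, so the measured speed becomes unreliable.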

This is a big problem for closed-circuit systems, where the footage is often dark and the camera angles are often odd. Take a convenience store camera as an example. It is aimed at the cash register, but it also takes in the window facing the street. If a robbery happens outside and the view is partly blocked, the AI will be of no help.

Artificial intelligence monitoring system built by Chinese company SenseTime

Similarly, although artificial intelligence can identify events in a video well, it still cannot extract the important context. Take the neural network that analyzes human behavior as an example. It might label the footage "this person is running," but it cannot tell you whether they are running because they are about to miss a bus or because they have just stolen someone's phone.

These accuracy problems deserve reflection: computers are still far from matching a human's insight into what is seen in a video. However, the technology is advancing quickly. Tracking vehicles by their license plates, identifying items such as cars and clothing, and automatically following a person across multiple cameras are all capabilities that are already very solid.

There are still many AI surveillance problems that need to be solved

However, even these very basic applications can have powerful effects. According to a report by the Wall Street Journal, in Xinjiang, traditional methods of surveillance and civil control have been combined with facial recognition, license plate scanners, iris scanners, and ubiquitous closed-circuit cameras to create a “total surveillance state” in which anyone can be tracked in public places. In Moscow, a similar infrastructure is being assembled, with facial recognition software being plugged into a centralized system of more than 100,000 high-resolution cameras that cover more than 90% of the city's apartment entrances.

This situation is likely to create a virtuous cycle: as the software gets better, the systems collect more data, which in turn helps the software get better still.

These systems do work, but many issues remain to be solved, such as algorithmic bias. Studies have shown that machine learning systems can absorb racial and gender discrimination, from image recognition software that associates women with the kitchen to criminal justice systems that consistently rate Black people as more likely to commit crimes. If we train artificial intelligence monitoring systems on old footage, such as video from CCTV or police cameras, the prejudice that exists in society is likely to be perpetuated.

Jay Stanley, senior policy analyst at the ACLU, said that even if we could eliminate the bias in these automated systems, that would not make them benign, because changing CCTV cameras from passive to active observers could have a huge negative impact on civil society.

"We want people not only to be free, but also to feel free."

"We want people not only to be free but also to feel free," Stanley said. "This means that they don't have to worry about how an unknown, unseen audience may be interpreting or misinterpreting their every action and word, and what negative consequences that might have for their lives."

In addition, false alarms from AI surveillance may also lead to more conflict between law enforcement and the public. In the shooting of Daniel Shaver, for example, police were called after Shaver was seen with a gun, and he was shot and killed; the gun turned out to be a pellet gun he used in his pest-control work. If a person can make such a mistake, how can a computer avoid it? Moreover, if monitoring systems become automated, such errors may well become more common.

As AI surveillance becomes more and more common, who will govern these algorithms?

In fact, what we are seeing in this area is only one part of a broader trend in artificial intelligence: using relatively crude tools to try to classify people, even though the accuracy of the results is questionable.

“What makes me uneasy is that many of these systems are being built into our core infrastructure, but there is no democratic process for assessing their effectiveness and accuracy.” These algorithms classify people using pattern recognition drawn from data that embeds cultural and historical biases, and they inevitably raise the problem of AI surveillance being abused.

To this problem, IC Realtime gives an answer that is common in the technology industry: these technologies are value-neutral. Any new technology risks falling into the hands of unscrupulous people, and that cannot be avoided, but the value it brings definitely outweighs its drawbacks.
