In the 2015-2016 wave of open-source machine learning platforms, the United States was the undisputed leader: Internet giants such as Google, Amazon, Microsoft, and IBM, along with major American research institutes, contributed a wide variety of machine learning tools to the open-source world. Many Chinese researchers were among the contributors, such as Jia Yangqing, who developed Caffe.

But in mainland China, both BAT (Baidu, Alibaba, Tencent) and academia have been largely absent from open-source machine learning projects. This is at odds with China's standing as home to the world's largest community of AI researchers, one that accounts for about half of all AI research. The good news is that in the second half of 2016, Baidu and Tencent both announced open-source platform strategies. They are late, but as players entering in the second half, what do their open-source platforms mean? In this article, the third installment of our survey of open-source machine learning projects, we look at four Chinese open-source projects, including the Baidu and Tencent platforms.

Note: According to statistics from Wang Yigang, vice president of the Innovation Workshop Artificial Intelligence Engineering Institute, China ranks first in the world in both the number of AI papers and their citations, accounting for more than half of the world total.

1. Baidu: "PaddlePaddle", hoping to win developers' favor

At the Baidu World conference on September 1, 2016, Baidu chief scientist Andrew Ng (Wu Enda) announced that PaddlePaddle, a heterogeneous distributed deep learning system developed in-house, would be open-sourced. This marked the birth of China's first open-source machine learning platform.

In fact, PaddlePaddle had been under development and in use for some time: it grew out of "Paddle", created by Baidu's Deep Learning Lab in 2013. At that time, most deep learning frameworks supported only single-GPU computing. For an organization like Baidu that needs to process data at large scale, this was clearly not enough and greatly slowed research. Baidu urgently needed a deep learning platform that supported multi-GPU, multi-machine parallel computing, and this led to the birth of Paddle. Since 2013, Paddle has been used by R&D engineers inside Baidu.

Xu Wei, a researcher at Baidu's Deep Learning Lab and the core creator of Paddle, now heads the PaddlePaddle project.


By the way, there is a story behind the renaming from "Paddle" to "PaddlePaddle": Paddle is an abbreviation of "Parallel Distributed Deep Learning." When it was released in September 2016, Andrew Ng (Wu Enda) felt that "PaddlePaddle" (which in English evokes rowing a boat, as in the children's song "Let us sway our twin oars, the little boat pushes the waves aside...") was catchier and easier to remember. Hence the lovely name.

So, what are the characteristics of PaddlePaddle?

Supports multiple deep learning models: DNN (Deep Neural Network), CNN (Convolutional Neural Network), RNN (Recurrent Neural Network), and complex memory models such as NTM (Neural Turing Machine).

Based on Spark, it is highly integrated with it.

Support for Python and C++ languages.

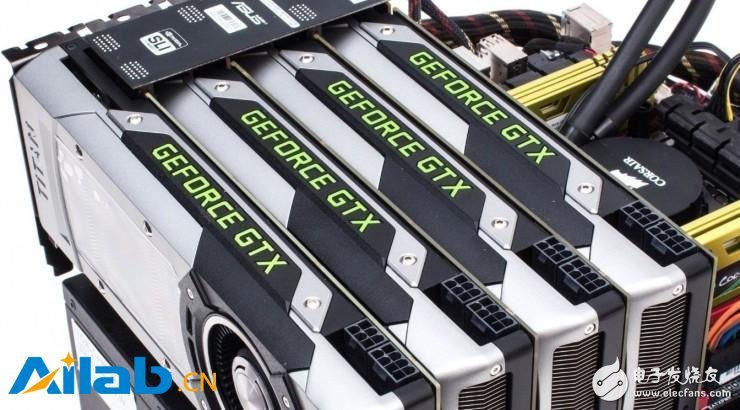

Support for distributed computing. This was its original design goal, and it allows PaddlePaddle to run in parallel across multiple GPUs and multiple machines.
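To make the multi-GPU, multi-machine idea concrete, here is a minimal sketch of data parallelism, one common way of spreading training across workers. It is not PaddlePaddle code and makes no claim about how PaddlePaddle is implemented: the function names, the toy model y ≈ w·x, and the in-process "workers" are all assumptions chosen for illustration.

```python
# Data-parallel gradient computation, simulated in a single process.
# Assumption: a toy model y ≈ w * x trained with mean squared error.
# No real GPUs or network are involved; each "worker" is just a list slice.

def gradient(w, batch):
    """d/dw of mean((w*x - y)^2) over one batch of (x, y) pairs."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)

def split(batch, num_workers):
    """Cut the batch into equally sized shards, one per hypothetical worker."""
    assert len(batch) % num_workers == 0, "sketch assumes an even split"
    size = len(batch) // num_workers
    return [batch[i * size:(i + 1) * size] for i in range(num_workers)]

def data_parallel_gradient(w, batch, num_workers):
    """Each worker computes a gradient on its own shard; results are averaged."""
    shard_grads = [gradient(w, shard) for shard in split(batch, num_workers)]
    return sum(shard_grads) / num_workers

batch = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]  # samples of y = 2x
w = 0.0
full = gradient(w, batch)                                  # single-worker gradient
parallel = data_parallel_gradient(w, batch, num_workers=2)  # two-worker gradient
```

With equally sized shards, the averaged shard gradients match the gradient of the whole batch, which is why this kind of work can be divided among many GPUs or machines without changing the result of each update.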

What advantages does PaddlePaddle have for developers compared to existing deep learning frameworks?

First, ease of use.

Compared with the lower-level Google TensorFlow, PaddlePaddle's defining trait is clear: it lets developers focus on the high-level structure of a deep learning model. Project leader Xu Wei explained:

"With the help of PaddlePaddle, designing a deep learning model is as easy as writing pseudocode. The designer only needs to pay attention to the high-level structure of the model, without worrying about any trivial low-level problems. In the future, programmers can quickly apply deep learning models to solve practical problems in areas such as medical care and finance, letting artificial intelligence play its most valuable role."

Because low-level coding is set aside, functionality that takes several lines of code in TensorFlow may need only one or two lines in PaddlePaddle. Xu Wei said that a machine translation program written in PaddlePaddle requires only a quarter of the code needed with "other" deep learning tools. This clearly takes the field's many newcomers into account and lowers the barrier to developing machine learning models. The immediate benefit is that developers are more likely to adopt PaddlePaddle.

Second, it is faster.

As mentioned above, the code on PaddlePaddle is more concise, and using it to develop the model obviously saves developers some time. This makes PaddlePaddle ideal for industrial applications, especially those that require rapid development.

In addition, since its inception PaddlePaddle has focused on exploiting GPU clusters to accelerate parallel computing in distributed environments. This makes AI training and inference on large-scale data much faster on PaddlePaddle than on platforms like TensorFlow.

So what does the industry think of PaddlePaddle?

The first thing to mention is Caffe. Many senior developers think PaddlePaddle's design philosophy is very similar to Caffe's, and some suspect that Baidu built it as a replacement for Caffe, somewhat like the replacement relationship between Google's TensorFlow and Theano.

On Zhihu, Caffe's creator Jia Yangqing commented on PaddlePaddle:

"Very high quality GPU code"

"Very good RNN design"

"The design is very clean, without too many abstractions, which is much better than TensorFlow"

"The design ideas are a bit dated"

"The overall design is very much in tune with Caffe, while solving some of the problems in Caffe's early design"

Finally, Jia said that PaddlePaddle's overall architecture shows real depth and is clearly the product of serious effort. On this point, it has won general recognition from developers.

To sum up, the industry's overall verdict on PaddlePaddle is "clean design, simple, stable, fast, and light on memory."

However, these advantages do not guarantee PaddlePaddle a place in the open-source machine learning world. Some developers abroad say that PaddlePaddle's biggest advantage is speed. Yet there are many open-source frameworks faster than TensorFlow, such as MXNet, Neon from Nervana Systems, and Veles from Samsung, all of which support distributed computing well, and none is as popular as TensorFlow. TensorFlow's huge user base also owes something to the backing of Google's own AI systems.

How much Baidu's AI products can help PaddlePaddle's popularity remains to be seen. We do know that it has been applied to a number of Baidu's businesses. Baidu said:

"PaddlePaddle has played a huge role in 30 of Baidu's major products and services, such as estimating food-delivery times, predicting when network disks will fail, precisely recommending the information users need, large-scale image recognition and classification, optical character recognition (OCR), virus and spam detection, machine translation, and autonomous driving."

Finally, let's see what Baidu CEO Li Yanhong (Robin Li) has to say about the company's release of PaddlePaddle:

"After five or six years of accumulation, PaddlePaddle is in effect the engine of Baidu's deep learning algorithms. By opening up the source code, we let students and all the young people in society learn from it and improve on it. I believe they will use their creativity to do many things we have never even thought of."

2. Tencent: "Angel" for the enterprise

In 2016, Tencent (nicknamed the "Goose Factory") made a series of big moves in AI:

In September, it established its AI Lab.

In November, it won the Sort Benchmark competition.

On December 18, at the Tencent Big Data Technology Summit and KDD China Technology Summit, it announced the existence of "Angel" and revealed that Angel was the platform behind the Sort Benchmark win.

In the first quarter of 2017, the Angel source code is to be opened.

Angel will be the second heavyweight open source platform released by BAT after PaddlePaddle. So, what exactly is it?

Simply put, Angel is a distributed computing framework for machine learning, jointly developed by Tencent, the Hong Kong University of Science and Technology, and Peking University. Tencent says it provides solutions for enterprise-scale machine learning tasks and is compatible with industry-leading deep learning frameworks such as Caffe, TensorFlow, and Torch. As far as we know, though, it is not a machine learning framework itself; its focus is data computation.

At the press conference on December 18, Jiang Jie, Tencent's chief data expert, said:

"In the face of Tencent's rapidly growing data mining needs, we hope to develop a high-performance computing framework for machine learning that can cope with very large data sets, and it is friendly enough to users and has a very low threshold for use. In this way, the Angel platform came into being."

Two keywords stand out here: "large" data scale and a "low" barrier to use.

On the "large" side, Tencent said that Angel supports training models with dimensions on the order of a billion:

"Angel uses a variety of industry-leading technologies as well as technologies developed in-house by Tencent, including SSP (Stale Synchronous Parallel), asynchronous distributed SGD, the multi-threaded parameter-sharing mode HogWild, network bandwidth traffic scheduling algorithms, pipelining of computation and network requests, parameter update indexing, and training data pre-processing schemes. These technologies have greatly improved Angel's performance, reaching several times to tens of times that of Spark, and Angel can operate with feature dimensions from 10 million to 1 billion."
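Of the techniques named in the quote, SSP is perhaps the easiest to illustrate. The idea is that fast workers may run ahead of slow ones, but only by a bounded number of iterations. The following is a minimal sketch of that bound only. It is not Angel's code: the class `SSPClock`, its methods, and the two-worker scenario are hypothetical, and no parameters or gradients are actually exchanged.

```python
# Stale Synchronous Parallel (SSP) staleness bound, simulated in one process.
# Assumption: each worker keeps a clock counting its completed iterations.
# A worker may begin another iteration only while it is at most `staleness`
# completed iterations ahead of the slowest worker.

class SSPClock:
    def __init__(self, num_workers, staleness):
        self.clocks = [0] * num_workers  # completed iterations per worker
        self.staleness = staleness       # 0 behaves like fully synchronous training

    def can_start(self, worker):
        """True if `worker` is allowed to begin its next iteration."""
        return self.clocks[worker] - min(self.clocks) <= self.staleness

    def try_step(self, worker):
        """Run one (simulated) iteration if allowed; report whether it ran."""
        if not self.can_start(worker):
            return False
        self.clocks[worker] += 1
        return True

ssp = SSPClock(num_workers=2, staleness=2)

# Worker 0 is fast and worker 1 is stalled: count how far worker 0 gets.
fast_steps = 0
while ssp.try_step(0):
    fast_steps += 1

blocked = not ssp.can_start(0)   # worker 0 must now wait for worker 1
ssp.try_step(1)                  # the slow worker finishes one iteration
unblocked = ssp.can_start(0)     # worker 0 may continue
```

Setting `staleness` to 0 forces every worker to wait for the slowest one after each iteration, while a larger value trades freshness of parameters for less waiting.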

On the "low" side, Angel does not use Python, the de facto standard of the machine learning world, but Java, the language most familiar in the enterprise world, along with Scala. Tencent stated: "In terms of usability, Angel provides a rich library of machine learning algorithms and highly abstract programming interfaces, automatic schemes for partitioning data computation and models, and adaptive parameter configuration. At the same time, users can program on Angel just as they would with MR and Spark. We have also built a drag-and-drop integrated development and operations portal that hides the underlying system details and lowers the barrier for users."

Overall, Angel is positioned against Spark. Jiang Jie claims that it combines the advantages of Spark and Petuum: "What Spark could run in the past, Angel now runs dozens of times faster. What Spark could not run, Angel runs with ease."

In fact, Angel is already the third generation big data computing platform of Goose Factory.

The first generation, "TDW", was a deeply customized version of Hadoop that focused on "scale" (expanding cluster size).

The second generation integrated Spark and Storm, with an emphasis on speed, or "real-time" processing.

Angel, the third-generation platform developed in-house, can handle very large-scale data and focuses on "intelligence": it is optimized specifically for machine learning.

Across these three generations, the platform evolved from relying on third-party open-source software to independent research and development, from data analysis to data mining, and from data parallelism to model parallelism. Angel now supports GPU computation as well as unstructured data such as text, speech, and images. Since its internal launch at Tencent earlier this year, Angel has been applied to precise recommendation services such as Tencent Video, Tencent Social Advertising, and user profile mining. In addition, the Chinese Internet industry has a habit of talking up "platforms" and "ecosystems" at every turn, and Tencent carries on the tradition faithfully: "Angel is not only a platform for parallel computing, but also an ecosystem." The claim may be overblown, but Tencent's big data ambitions are evident.

On the evening of December 18, Ma Huateng wrote on WeChat Moments: "AI and big data will become standard in every field in the future, and we look forward to more industry peers working together on open source and helping each other."

But for the machine learning community, is the meaning of Angel open source as big as the Goose Factory claims?

On this question, Peng Hesen, a Microsoft researcher and Internet celebrity in the machine learning community, said:

"For smaller companies and organizations, Spark and even MySQL are enough (for the sake of political correctness, I will mention PostgreSQL too); and companies that are really big enough to need Angel, such as Alibaba, have already developed their own big data processing platforms."

He therefore concluded that Angel's release comes with "very awkward timing and market positioning."


Compared with Baidu's PaddlePaddle, Angel differs in one major respect: it serves enterprises with big data processing needs, not individual developers. Unfortunately, because Angel has not yet been officially open-sourced, peers in big data and machine learning cannot yet evaluate it. All current information comes from Tencent's official publicity. For the impact of Angel's source code on the industry after its release, please watch for our follow-up reports.

Finally, let's take a look at Jiang Jie's official summary of what open-sourcing Angel means:

"As an important branch of artificial intelligence, machine learning is at an early stage of development. Open-sourcing Angel opens up the experience and advanced technology Tencent has accumulated over 18 years of massive data processing. We will connect every resource that can be connected, stimulate more creativity, and gradually turn this good platform into a valuable ecosystem that makes business operations more efficient, products smarter, and user experience better."

3. Alibaba: the half-hidden DTPAI

But when it comes to platforms, Alibaba has to be mentioned.

Compared with Baidu, Alibaba's AI strategy looks more "pragmatic": relying mainly on Alibaba Cloud computing, it has built a series of AI tools and services close to the Taobao ecosystem, such as Ali Xiaomi. Its basic research started late and is lower-profile than Baidu's and Tencent's. The big event in Alibaba's 2016 AI strategy was the Yunqi Conference on August 9, where Ma Yun personally unveiled the artificial intelligence ET, whose predecessor was Alibaba's "Little Ai". From what is known so far, Alibaba wants to make ET a multi-purpose AI platform for speech and image recognition, urban computing (traffic), enterprise cloud computing, "new manufacturing", healthcare, and more, which inevitably brings IBM Watson to mind. In Alibaba's words, ET will become a "global intelligence."

However, when it comes to open-source projects, what is Alibaba's "layout" (a word Ma Yun is fond of)?

The answer is part pleasant surprise, part disappointment.

The good news is that Alibaba announced the data mining platform DTPAI (full name: Data Technology, the Platform of AI) as early as 2015.

The bad news is that nothing has happened since.

Back in August 2015, Alibaba announced that it would offer DTPAI to Alibaba Cloud customers as a paid data mining service. Naturally, the launch came with talk of "ecosystem" and "platform", including the claim that DTPAI is "China's first artificial intelligence platform." The bar was set quite high.

What are the characteristics?

First, DTPAI will integrate Alibaba's core algorithm libraries, including feature engineering, large-scale machine learning, deep learning, and more. Second, like Baidu and Tencent, Alibaba attaches great importance to ease of use. Wei Xiao, product manager for Alibaba's ODPS and iDST, said that DTPAI supports visual programming by mouse drag-and-drop as well as model visualization, and that it interfaces broadly with open-source technologies such as MapReduce, Spark, DMLC, and R.

If that were all, an Alibaba Cloud data mining tool would not appear in this article. What really interests us is Alibaba's statement that DTPAI "will provide a general deep learning framework in the future, and its algorithm library will later be opened to the public."

Well, that is all the information there is about DTPAI. Seriously, there has been no news of it since 2015. Is Alibaba Cloud stalling, or holding back a big move? We can only wait and see.

4. Shan Shiguang: SeetaFace, the lone entry from mainland academia

Having looked at BAT's open-source platform plans, let's turn to a project that began in academia. Unlike the situation in AI academia abroad, most people have never heard of an open-source machine learning project that originated in Chinese academia. The field is almost blank. We say "almost" because Shan Shiguang of the Institute of Computing Technology, Chinese Academy of Sciences, has developed the face recognition engine SeetaFace.

Mr. Shan is one of the leading academic figures in China's AI community. He founded a startup in the second half of 2016 and released SeetaFace shortly afterward. His research team said it open-sourced SeetaFace because "the field lacks an open-source baseline face recognition system that includes all the technical modules." SeetaFace will be free for both academia and industry and is expected to fill this gap.

SeetaFace is written in C++ and does not depend on any third-party libraries. As a fully automatic face recognition system, it integrates three core modules: a face detection module (SeetaFace Detection), a facial landmark alignment module (SeetaFace Alignment), and a face feature extraction and comparison module (SeetaFace Identification).

The system runs on a single Intel i7 CPU, lowering the hardware barrier for face recognition. Open-sourcing it is expected to help the many companies and laboratories that need face recognition integrate SeetaFace into their products, significantly reducing development costs.
